|

Public Types |

| enum | RunModeType { FUZZY, DEFAULT, IC, DISTRIB } |

| | The available ARTMAP models (see main page for differences between models).

|

Public Member Functions |

| | artmap (int M, int L) |

| | Artmap class constructor - Allocates internal arrays, sets initial values.

|

| | ~artmap () |

| | Artmap class destructor - frees array storage.

|

| void | train (float *a, int K) |

| | Trains the artmap network: Given input vector a, predict target class K.

|

| void | test (float *a) |

| | Tests a trained artmap network: given an input a, sets the output prediction (retrieved via getOutput()).

|

| float | getOutput (int k) |

| | Returns the k-th output (distributed prediction).

|

| int | getMaxOutputIndex () |

| | Returns the index of the largest output prediction, which in a winner-take-all situation (fuzzy ARTMAP) is the predicted class.

|

| void | fwrite (ofstream &ofs) |

| | Writes out all the persistent data describing a trained ARTMAP network.

|

| void | fread (ifstream &ifs, string &specialRequest) |

| | Loads a trained ARTMAP network from a file.

|

| void | setParam (const string &name, const string &value) |

| | Provides a string-based interface for setting ARTMAP parameters.

|

| int | getC () |

| | Returns the number of category nodes (aka templates learned by the network).

|

| int | getNodeClass (int j) |

| | Returns the output class associated with a category node with the given index.

|

| int | getLtmRequired () |

| | Returns the number of bytes required to store the weights for the network.

|

| float & | tauIj (int i, int j) |

| | Accessor method for retrieving the model's bottom-up weights ($\tau_{ij}$ values).

|

| float & | tauJi (int i, int j) |

| | Accessor method for retrieving the model's top-down weights ($\tau_{ji}$ values).

|

| int | getOutputType (const string &name) |

| | Given the name of an output request, returns a number specifying the type of the output (at the moment, all outputs are of type int).

|

| int | getInt (const string &name) |

| | Returns the value of the specified item as an integer.

|

| float | getFloat (const string &name) |

| | Returns the value of the specified item as a floating point number.

|

| string & | getString (const string &name) |

| | Returns the value of the specified item as a string.

|

| void | requestOutput (const string &name, ofstream *ost) |

| | Registers a request for an output to be directed to a file.

|

| void | closeStreams () |

| | Closes any streams that were passed during a requestOutput() call.

|

| void | setNetworkType (RunModeType v) |

| | Accessor method: sets NetworkType, which controls the algorithm used.

|

| void | setM (int v) |

| | Accessor method: sets M, the number of inputs (before complement-coding).

|

| void | setL (int v) |

| | Accessor method: sets L, the number of output classes.

|

| void | setRhoBar (float v) |

| | Accessor method: sets RhoBar, the baseline vigilance used during training.

|

| void | setRhoBarTest (float v) |

| | Accessor method: sets RhoBarTest, the baseline vigilance used during testing.

|

| void | setAlpha (float v) |

| | Accessor method: sets Alpha, the signal rule parameter.

|

| void | setBeta (float v) |

| | Accessor method: sets Beta, the learning rate.

|

| void | setEps (float v) |

| | Accessor method: sets Eps, the match tracking parameter.

|

| void | setP (float v) |

| | Accessor method: sets P, the CAM rule power parameter.

|

| RunModeType | getNetworkType () |

| | Accessor method: returns NetworkType, which controls the algorithm used.

|

| int | getM () |

| | Accessor method: returns M, the number of inputs (before complement-coding).

|

| int | getL () |

| | Accessor method: returns L, the number of output classes.

|

| float | getRhoBar () |

| | Accessor method: returns RhoBar, the baseline vigilance used during training.

|

| float | getRhoBarTest () |

| | Accessor method: returns RhoBarTest, the baseline vigilance used during testing.

|

| float | getAlpha () |

| | Accessor method: returns Alpha, the signal rule parameter.

|

| float | getBeta () |

| | Accessor method: returns Beta, the learning rate.

|

| float | getEps () |

| | Accessor method: returns Eps, the match tracking parameter.

|

| float | getP () |

| | Accessor method: returns P, the CAM rule power parameter.

|

Private Member Functions |

| void | complementCode (float *a) |

| | Complement-codes the input vector.

|

| int | F0_to_F2_signal () |

| | Computes the signal function from the F0 to the F2 layer, for each of the C category nodes.

|

| void | newNode () |

| | Adds a new node to the F2 layer.

|

| void | CAM_distrib () |

| | Implements the Increased-Gradient Content-Addressable-Memory (CAM) model.

|

| void | CAM_WTA () |

| | Implements the Winner-Take-All (WTA) Content-Addressable-Memory (CAM) model.

|

| void | F1signal_WTA () |

| | Propagates a WTA signal $\sigma_i$ from the F3 to the F1 layer.

|

| void | F1signal_distrib () |

| | Propagates a distributed signal $\sigma_i$ from the F3 to the F1 layer.

|

| bool | passesVigilance () |

| | Implements the vigilance criterion, testing that the match is good enough.

|

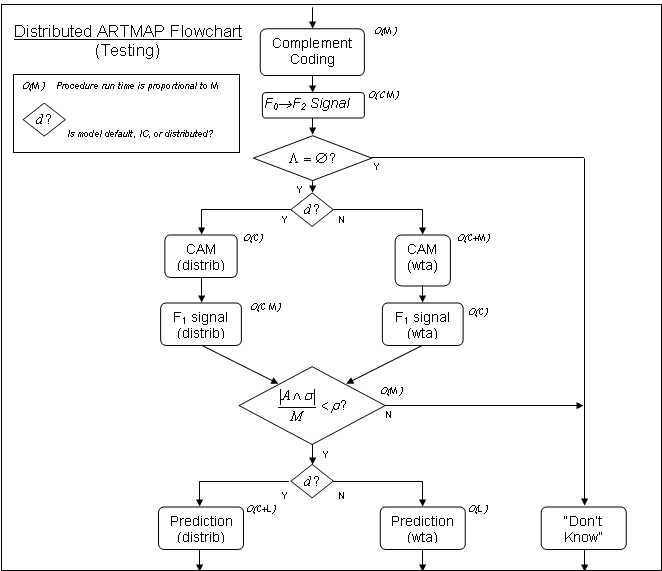

| int | prediction_distrib () |

| | Propagates a distributed signal from F3 to F0ab.

|

| int | prediction_WTA () |

| | Propagates a signal from the winning node J to F0ab.

|

| void | matchTracking () |

| | In response to a predictive mismatch, raises vigilance so next choice is more conservative.

|

| void | creditAssignment () |

| | Adjusts activations prior to resonance (distributed mode only), so that nodes making the wrong prediction aren't allowed to learn.

|

| void | resonance_distrib () |

| | When a successful prediction has been made, adjusts the long-term memory weights to accommodate the newly matched sample (distributed version).

|

| void | resonance_WTA () |

| | When a successful prediction has been made, adjusts the long-term memory weights to accommodate the newly matched sample (WTA version).

|

| void | growF2 (float factor) |

| | Increases the pool of nodes available for the F2 layer.

|

| float | cost (float x) |

| | This cost function takes the input signal to an F2 node and rescales the metric so that nodes that closely match the training/test sample being evaluated have low cost.

|

| void | toStr () |

| | Logs all the ARTMAP network details.

|

| void | toStr_dimensions () |

| | Logs the network's dimensions.

|

| void | toStr_A () |

| | Logs the activations of the F1 node field, that is, the complement-coded input vector.

|

| void | toStr_nodeJTSH (int j) |

| | Logs the F2 input signal, along with its tonic and phasic components (H = $\Theta$).

|

| void | toStr_nodeJdetails (int j) |

| | Logs the F2 node activation (pre/post normalization = y/Y), and the class to which the node maps.

|

| void | toStr_nodeJtauIj (int j) |

| | Logs the Jth node's bottom-up thresholds ($\tau_{ij}$).

|

| void | toStr_nodeJtauJi (int j) |

| | Logs the Jth node's top-down thresholds ($\tau_{ji}$).

|

| void | toStr_x () |

| | Logs the match field activations.

|

| void | toStr_sigma_i () |

| | Logs the F3->F1 signal $\sigma_i$.

|

| void | toStr_sigma_k () |

| | Logs the output prediction $\sigma_k$.

|
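The complement coding performed by complementCode() can be illustrated with a minimal sketch. It assumes the standard fuzzy ART definition, in which an M-dimensional input a with components in [0, 1] becomes the 2M-dimensional vector (a, 1 - a). The function name `complementCodeSketch` and the use of `std::vector` are illustrative choices, not part of the artmap class.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper (not the class's private method): returns the
// complement-coded vector A = (a, 1 - a), which has 2*M entries.
std::vector<float> complementCodeSketch(const std::vector<float>& a) {
    const std::size_t M = a.size();
    std::vector<float> A(2 * M);
    for (std::size_t i = 0; i < M; ++i) {
        // Assumption: inputs are already scaled to the unit interval.
        assert(a[i] >= 0.0f && a[i] <= 1.0f);
        A[i] = a[i];             // original component
        A[M + i] = 1.0f - a[i];  // its complement
    }
    return A;
}
```

Under this definition the components of A always sum to M, whatever the input values are.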

Private Attributes |

| RunModeType | NetworkType |

| | Controls the algorithm used.

|

| int | M |

| | Number of inputs (before complement-coding).

|

| int | L |

| | Number of output classes ([1-L], not [0-(L-1)]).

|

| float | RhoBar |

| | Baseline vigilance - training.

|

| float | RhoBarTest |

| | Baseline vigilance - testing.

|

| float | Alpha |

| | Signal rule parameter.

|

| float | Beta |

| | Learning rate.

|

| float | Eps |

| | Match tracking parameter.

|

| float | P |

| | CAM rule power parameter.

|

| int | C |

| | Number of committed nodes.

|

| int | J |

| | In WTA mode, index of the winning node.

|

| int | K |

| | The target class (1-L, not 0-(L-1)).

|

| float | rho |

| | Current vigilance.

|

| float * | A |

| | Indexed by i - Complement-coded input. (Index ranges - i: 1-M, j: 1-C, k: 1-L.)

|

| float * | x |

| | Indexed by i - F1, matching.

|

| float * | y |

| | Indexed by j - F2, coding.

|

| float * | Y |

| | Indexed by j - F3, counting.

|

| float * | T |

| | Indexed by j - Total F0->F2.

|

| float * | S |

| | Indexed by j - Phasic F0->F2.

|

| float * | H |

| | Indexed by j - Tonic F0->F2 (Capital Theta).

|

| float * | c |

| | Indexed by j - F2->F3.

|

| bool * | lambda |

| | Indexed by j - T if node is eligible, F otherwise.

|

| float * | sigma_i |

| | Indexed by i - F3->F1.

|

| float * | sigma_k |

| | Indexed by k - F3->F0ab.

|

| int * | kap |

| | Indexed by j - F3->Fab (small kappa).

|

| float * | dKap |

| | Distributed version of kap.

|

| float * | tIj |

| | Indexed by i&j - F0->F2 (tau sub ij).

|

| float * | tJi |

| | Indexed by j&i - F3->F1 (tau sub ji).

|

| bool | dMapWeights |

| | If true, use dKap; otherwise use kap.

|

| float | Tu |

| | Uncommitted node activation.

|

| float | sum_x |

| | To avoid recomputing norm.

|

| int | _2M |

| | To keep from repeatedly calculating 2*M.

|

| int | N |

| | Growable upper bound on coding nodes.

|

| int | i |

| int | j |

| int | k |

| | Indices i, j and k, so we don't have to declare 'em everywhere.

|

| ofstream * | ostCategoryActivations |

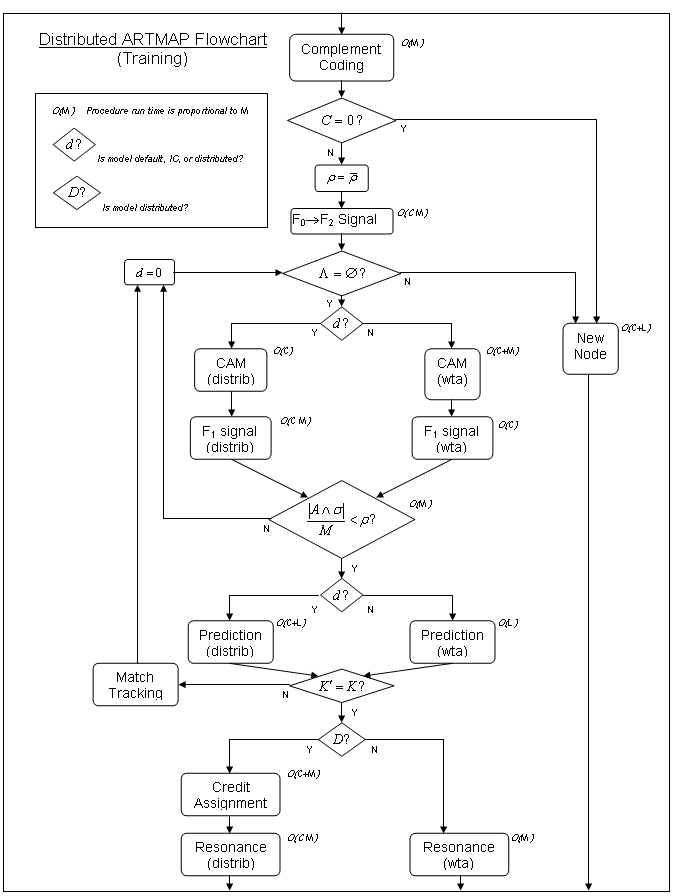

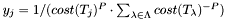

Depending on the NetworkType setting, can emulate fuzzy ARTMAP, Default ARTMAP, or the instance counting and distributed varieties. A flowchart of the training process is shown below:


[…] are eligible for the competition. The computation is based on the […] in the distributed ARTMAP paper). Any such nodes (there may be overlapping point boxes as the winners) receive all the activation, which they split equally. In other words, in this case the activation will not be distributed to the rest of the F2 layer nodes.

[…] in the previous equation serves to tune the contrast in the resulting activation gradient across nodes, hence the name 'increased-gradient' for this CAM. […], which gives extra weight to those nodes that have more 'experience', i.e., they have been associated with more training samples.

[…] value is set to 1, and that of all the other nodes is set to 0. […] and […].

[…], which corresponds to the training/test sample falling within a point category box.

[…]) […]) are normalized. […] is added to the set […], as used in the Distributed ARTMAP paper. Specifically, it represents those nodes that are both more active than the uncommitted node baseline activation and have not yet been reset. In other words, it represents those nodes that are still eligible to encode the input. This change in meaning simplifies the algorithm and enhances the efficiency of the implementation, which only has to check a single set for node eligibility, rather than two.

The value $\sigma_i=\sum_{j=0}^{C-1}{[Y_j-\tau_{ji}]}^+$, sending from each […] to each […] whatever part of the activation level […]. Note that using default ARTMAP notation, this is equivalent to […].
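As a rough illustration of the signal $\sigma_i=\sum_{j=0}^{C-1}{[Y_j-\tau_{ji}]}^+$, the sketch below sums the rectified differences over all category nodes. The function names, the `std::vector` containers, and the row-major threshold layout `tauJi[j * n + i]` are assumptions made for this example. They do not describe how the artmap class stores its weights.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Rectification [v]^+ = max(v, 0).
inline float rectify(float v) { return std::max(v, 0.0f); }

// Hypothetical helper: sigma_i = sum over j of [Y_j - tau_ji]^+.
// Y has one activation per category node; tauJi is stored row-major as
// tauJi[j * n + i], where n is the number of F1 nodes (assumed layout).
std::vector<float> f1SignalSketch(const std::vector<float>& Y,
                                  const std::vector<float>& tauJi,
                                  std::size_t n) {
    assert(tauJi.size() == Y.size() * n);
    std::vector<float> sigma(n, 0.0f);
    for (std::size_t j = 0; j < Y.size(); ++j) {
        for (std::size_t i = 0; i < n; ++i) {
            // Only the part of Y_j above the threshold contributes.
            sigma[i] += rectify(Y[j] - tauJi[j * n + i]);
        }
    }
    return sigma;
}
```

With Y = (0.75, 0.25) and thresholds (0.25, 0.5) for node 0 and (0.5, 0.25) for node 1, only node 0 exceeds its thresholds, so the result is (0.5, 0.25).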

[…]. Also note that the […]) is raised to $[(\sum{|A_i\wedge\sigma_i|})/M]+\epsilon$.
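The expression $[(\sum{|A_i\wedge\sigma_i|})/M]+\epsilon$ can be evaluated as in the following sketch. It assumes that $\wedge$ denotes the component-wise minimum (fuzzy AND), as is conventional in the ARTMAP literature, and that all components are non-negative so the absolute value has no effect. The function name and signature are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: returns (sum_i min(A_i, sigma_i)) / M + eps.
// A is the complement-coded input, sigma the F3->F1 signal, M the number
// of inputs before complement coding, eps the match tracking parameter.
float matchTrackedVigilanceSketch(const std::vector<float>& A,
                                  const std::vector<float>& sigma,
                                  std::size_t M, float eps) {
    assert(A.size() == sigma.size());
    assert(M > 0);
    float overlap = 0.0f;
    for (std::size_t i = 0; i < A.size(); ++i) {
        overlap += std::min(A[i], sigma[i]);  // fuzzy AND, assumed to be min
    }
    return overlap / static_cast<float>(M) + eps;
}
```

For A = (0.25, 0.75, 0.75, 0.25), sigma = (0.5, 0.5, 0.5, 0.5), M = 2 and eps = 0.125, the overlap is 1.5, giving a value of 0.875.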

[…] holds. […]. This was in response to a bug in which a newly committed node did not satisfy the vigilance criterion when it was set to maximum (1.0), as […] was infinitesimally smaller than […]. The value […].

\[\sigma_k=\sum_{j=0}^{C-1}Y_j\]

for which […] for some […], the index of the predicted output class, to the index of the largest […], the index of the predicted output class
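The following sketch shows one way the class signal $\sigma_k$ and the predicted class index could be computed. Because the condition restricting the sum did not survive in the text above, the sketch assumes that each category node j contributes its activation $Y_j$ to the class it maps to (compare getNodeClass()). It also uses 0-based class indices for brevity, whereas this class numbers output classes from 1 to L. All names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical helper: sigma_k = sum of Y_j over nodes j mapped to class k.
// nodeClass[j] is the (0-based, assumed) class label of category node j.
std::vector<float> classSignalSketch(const std::vector<float>& Y,
                                     const std::vector<int>& nodeClass,
                                     int numClasses) {
    assert(Y.size() == nodeClass.size());
    std::vector<float> sigma(static_cast<std::size_t>(numClasses), 0.0f);
    for (std::size_t j = 0; j < Y.size(); ++j) {
        assert(nodeClass[j] >= 0 && nodeClass[j] < numClasses);
        sigma[static_cast<std::size_t>(nodeClass[j])] += Y[j];
    }
    return sigma;
}

// Returns the index of the largest entry (first one wins on ties).
int largestIndexSketch(const std::vector<float>& sigma) {
    assert(!sigma.empty());
    return static_cast<int>(std::distance(
        sigma.begin(), std::max_element(sigma.begin(), sigma.end())));
}
```

In winner-take-all operation only one node is active, so the largest $\sigma_k$ is simply the class of the winning node.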

\[\tau_{ij}=\tau_{ij}^{old}+\beta[y_j-\tau_{ij}^{old}-A_i]^+\]

\[\tau_{ji}=\tau_{ji}^{old}+\frac{\beta[y_j-\tau_{ji}^{old}-A_i]^+}{\sigma_i}\]

$\tau_{iJ}=\tau_{Ji}=\tau_{iJ}^{old}+\beta[1-\tau_{iJ}^{old}-A_i]^+$, and the node's instance count is incremented: […].
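The winner-take-all update $\tau_{iJ}=\tau_{Ji}=\tau_{iJ}^{old}+\beta[1-\tau_{iJ}^{old}-A_i]^+$ can be sketched as follows. The `NodeSketch` struct and its field names are hypothetical stand-ins for the winning node's state. In particular, the exact form of the instance-count increment is not shown in the text above, so a plain increment by one is assumed.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical container for the winning node J's state.
struct NodeSketch {
    std::vector<float> tau;  // thresholds tau_iJ (equal to tau_Ji in WTA mode)
    int instanceCount = 0;   // number of samples the node has encoded
};

// Applies tau_iJ = tau_iJ_old + beta * [1 - tau_iJ_old - A_i]^+ for every i,
// then increments the instance count (assumed to grow by one per sample).
void resonanceWtaSketch(NodeSketch& node, const std::vector<float>& A,
                        float beta) {
    assert(node.tau.size() == A.size());
    for (std::size_t i = 0; i < A.size(); ++i) {
        const float delta = std::max(1.0f - node.tau[i] - A[i], 0.0f);
        node.tau[i] += beta * delta;
    }
    ++node.instanceCount;
}
```

Assuming thresholds that start at 0 and $\beta = 1$, a single presentation of A sets each threshold to $1 - A_i$. Presenting the same A again leaves the thresholds unchanged, because the rectified term is then zero.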

[…], which is set in “[…]” in the flowchart.
